Carsten Moenning, Ph.D.

This is the final part of a two-part series on running AI applications on the edge in general and Computer Vision inference on an Intel Neural Compute Stick 2-accelerated Raspberry Pi 3, in particular.

Have a look at part 1 to obtain:

an overview of edge AI hardware accelerator and development board options,

a guide as to how to configure a Raspberry Pi with an on-board camera as an edge AI-ready device,

a guide as to how to install the Intel OpenVINO toolkit on the Raspberry Pi and, for example, your laptop, to get ready for performing Computer Vision inference on the Raspberry Pi.

This second part builds on the second and third of these. Whilst part 1 finished with an OpenVINO face detection demo application running on a Raspberry Pi, this post deals with developing your very own custom Computer Vision solution on such an edge device. More specifically, I will walk you through the custom implementation of a YOLOv3-tiny object detection model on a Raspberry Pi pre-configured as described in part 1. (Details on the YOLOv3 object detection model can be found here.) But first, let’s have a closer look at the open source Intel OpenVINO toolkit in general and the OpenVINO toolkit for Raspbian OS in particular. 🗹

The Intel OpenVINO toolkit

To quote the official OpenVINO toolkit documentation: “The OpenVINO™ toolkit is a comprehensive toolkit for quickly developing applications and solutions that emulate human vision. Based on Convolutional Neural Networks (CNNs), the toolkit extends CV workloads across Intel® hardware, maximizing performance.”

To quote further, the toolkit:

“enables CNN-based deep learning inference on the edge

supports heterogeneous execution across an Intel® CPU, Intel® Integrated Graphics, Intel® FPGA, Intel® Neural Compute Stick 2 and Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

speeds time-to-market via an easy-to-use library of computer vision functions and pre-optimized kernels

includes optimized calls for computer vision standards, including OpenCV and OpenCL™.”

Amongst other things, this implies that the toolkit is particularly well-suited for the development of Computer Vision inference applications on edge devices such as a Raspberry Pi enhanced by an Intel Neural Compute Stick 2 USB accelerator stick featuring the Intel Movidius VPU.

⚡ In case you might be wondering: “OpenVINO” stands for “Open Visual Inference and Neural Network Optimization”, indicating its Deep Learning Computer Vision focus. ⚡

The main elements of the OpenVINO toolkit consist of:

the Model Optimizer,

the Inference Engine and

the Open Model Zoo.

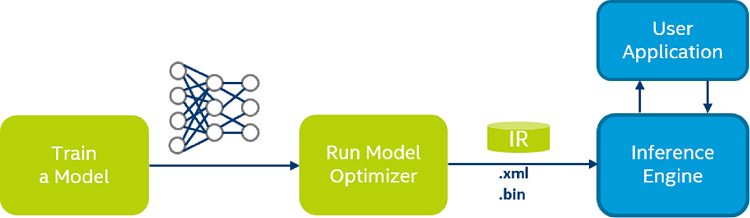

The Model Optimizer takes Deep Learning Computer Vision models pre-trained in the Caffe, TensorFlow, MXNet, Kaldi or ONNX frameworks and converts them into the OpenVINO Intermediate Representation (IR), a streamlined model representation optimised for execution by the Inference Engine on an edge device. The Inference Engine loads these IR files and runs inference on them using a common API across CPU, GPU and VPU hardware.

The Open Model Zoo provides pre-trained models for free download. Using them can speed up the development process considerably, since you do not have to train your own model first and then convert it into the Intermediate Representation with the Model Optimizer before it is ready for use with the Inference Engine on the edge device under consideration.

When not using a pre-trained model from the Open Model Zoo, the typical workflow for deploying a deep learning model on the edge using the OpenVINO toolkit is shown below.

So, Open Model Zoo models come with the advantage of already having been converted into the OpenVINO Intermediate Representation, but what is the Intermediate Representation?

The IR is the result of optimising an input model for edge inference with the help of one or more of the following techniques:

quantization, i.e. the reduction in numerical precision of the model weights and biases,

model layer fusion, i.e. the combination of multiple model layers into one,

freezing, i.e. the removal of metadata and operations which were only useful for model training (in the case of TensorFlow models only).
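To make the first of these techniques a little more tangible, here is a minimal NumPy sketch of its simplest form, a cast of model weights from 32-bit to 16-bit floating point. The weight tensor and the helper name quantize_fp16 are hypothetical; the sketch only illustrates the memory saving and the small rounding error, not what the Model Optimizer does internally.

```python
import numpy as np


def quantize_fp16(weights: np.ndarray) -> np.ndarray:
    """Reduce numerical precision from 32-bit to 16-bit floating point."""
    return weights.astype(np.float16)


rng = np.random.default_rng(seed=0)
# Hypothetical FP32 weights of a 3x3 convolution with 16 input and 32 output channels.
w32 = rng.normal(0.0, 0.1, size=(32, 16, 3, 3)).astype(np.float32)
w16 = quantize_fp16(w32)

print(w32.nbytes, w16.nbytes)  # 18432 9216
max_err = float(np.max(np.abs(w32 - w16.astype(np.float32))))
print(f"largest rounding error: {max_err:.1e}")
```

Halving the storage per weight comes at the cost of a rounding error that is small relative to typical weight magnitudes.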

You are also perfectly free to use models from other sources, of course. These will have to go through step two of the OpenVINO workflow, though. That is, you will have to apply the Model Optimizer to the input model to produce its inference-optimised IR.

Once the IR is generated, its .xml (optimised model topology) and .bin (optimised model weights and biases) files can be fed into the OpenVINO Inference Engine running on the edge device for performance-optimised inference on the edge.
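Since the Model Optimizer writes both files under the same base name, an application usually only needs the path to the .xml file and derives the .bin path from it. A minimal sketch of that convention, using a hypothetical helper called ir_file_pair:

```python
import os
from typing import Tuple


def ir_file_pair(model_xml: str) -> Tuple[str, str]:
    """Return the (.xml, .bin) IR file pair, assuming both share one base name."""
    base, ext = os.path.splitext(model_xml)
    if ext.lower() != ".xml":
        raise ValueError(f"expected an .xml topology file, got {model_xml!r}")
    return model_xml, base + ".bin"


xml_file, bin_file = ir_file_pair("frozen_darknet_yolov3_model.xml")
print(bin_file)  # frozen_darknet_yolov3_model.bin
```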

In fact, we already made our way through this workflow the easy way during the face detection example in part 1 of this two-part blog series: We downloaded a pre-trained face detection model from the Open Model Zoo. More specifically, since the OpenVINO Model Downloader is not available within the OpenVINO toolkit for Raspbian OS, we downloaded the IR files manually onto the Raspberry Pi and fed them into the Inference Engine for bounding box generation on an input image.

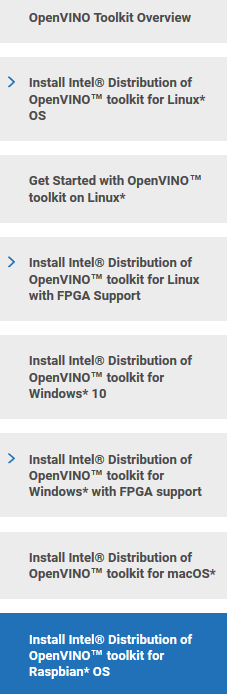

We will now take the slightly more challenging route of converting a pre-trained public model, the “tiny” version of YOLOv3 [2], into the IR via the Model Optimizer. This brings us to a crucial point: since the Inference Engine is the only one of OpenVINO’s main elements included in the OpenVINO toolkit for Raspbian OS, the model conversion needs to be done outside of the Raspberry Pi environment, for example on your main machine. This means that you will need to install OpenVINO on your main machine as well. With the help of the OpenVINO Linux, macOS and Windows installation guides, this should not prove too much of a challenge.

⚡ In the case of the OpenVINO toolkit for Windows, you could do without the Microsoft Visual Studio and CMake installation steps, since, in the following, we will be using the Model Optimizer only. However, if you want to have a fully-fledged OpenVINO installation on your Windows machine, it is, of course, strongly recommended to complete all of the installation steps. ⚡

You are now all set for the custom implementation of the YOLOv3-tiny [2] model on your Raspberry Pi.

Converting a pre-trained YOLOv3-tiny model into the OpenVINO Intermediate Representation

With the OpenVINO installation on your main machine out of the way, let’s generate an IR of the YOLOv3-tiny model. The network architecture of YOLOv3-tiny is execution-optimised for low-performance devices at the expense of reduced prediction accuracy: It uses only 19 convolutional layers instead of the 53 convolutional layers of the standard YOLOv3 model. As a consequence, it is much more execution-efficient than the standard YOLOv3 model, but also much less accurate with a mean average precision (mAP) of 33.1% compared to a mAP value of 51–57% of the standard YOLOv3 model.

To convert the YOLOv3-tiny model into the IR format, we will largely follow the OpenVINO guide for converting YOLO models.

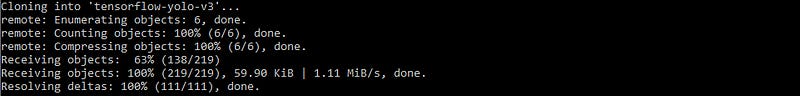

Step 1: Clone the following GitHub repository to obtain a TensorFlow-based YOLOv3-tiny implementation.

⚡ In principle, you can also use any other TensorFlow-based YOLOv3-tiny model implementation, but you may run into difficulties when trying to convert it into the Intermediate Representation using the steps below. ⚡

git clone https://github.com/mystic123/tensorflow-yolo-v3.git

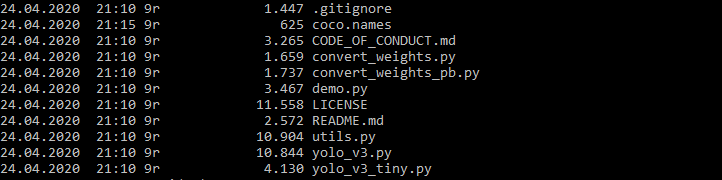

Step 2: Download a set of class labels in the form of, for example, coco.names, which includes 80 common object classes ranging from people to cars and animals, or provide your own set of class labels you want to do inference on using YOLOv3-tiny, subject to YOLOv3-tiny having been pre-trained on your classes of choice.
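A class label file such as coco.names simply holds one label per line, in the order of the model’s class indices. A minimal sketch for reading it (the function name load_class_labels is hypothetical, not taken from the article’s codebase):

```python
from typing import List


def load_class_labels(path: str) -> List[str]:
    """Read one class label per line, skipping blank lines (coco.names layout)."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]
```

A detection with class index k can then be labelled via labels[k].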

Step 3: Download pre-trained YOLOv3-tiny weights, or train YOLOv3-tiny yourself and use the resulting model weights.

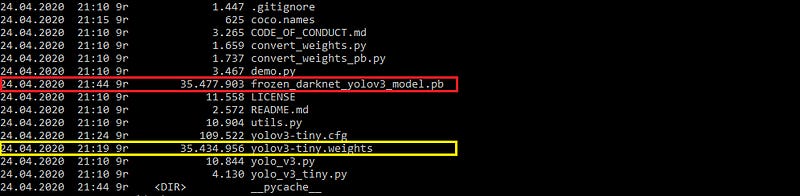

Step 4: Convert the YOLOv3-tiny model to the protocol buffer file format (.pb) to obtain a simplified, i.e. “frozen”, model definition for inference.

python convert_weights_pb.py --class_names coco.names --data_format NHWC --weights_file yolov3-tiny.weights --tiny

The --tiny parameter at the end of the statement instructs the converter to generate the YOLOv3-tiny version of the frozen TensorFlow graph.

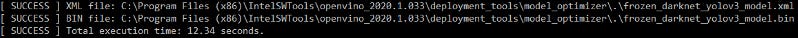

Step 5: Convert the frozen model definition to its IR format. Don’t forget to run the OpenVINO setupvars environment setup script located in $OPENVINO_INSTALL_DIR/bin/ prior to this step.

python mo_tf.py --input_model frozen_darknet_yolov3_model.pb --tensorflow_use_custom_operations_config <path/>yolo_v3_tiny.json --batch 1 --generate_deprecated_IR_V7

where the OpenVINO <path/> to the yolo_v3_tiny.json model configuration file should look something like this:

$OPENVINO_INSTALL_DIR/deployment_tools/model_optimizer/extensions/front/tf/
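If you prefer to script this conversion, the same call can be assembled as an argument list in Python. This is a hypothetical helper, not part of OpenVINO; it uses only the options from the command above, and /opt/intel/openvino is merely an example value for $OPENVINO_INSTALL_DIR.

```python
import os
import shlex
from typing import List


def build_mo_command(frozen_pb: str, install_dir: str) -> List[str]:
    """Assemble the Model Optimizer call from Step 5 as an argument list."""
    config = os.path.join(install_dir, "deployment_tools", "model_optimizer",
                          "extensions", "front", "tf", "yolo_v3_tiny.json")
    return [
        "python", "mo_tf.py",
        "--input_model", frozen_pb,
        "--tensorflow_use_custom_operations_config", config,
        "--batch", "1",
        "--generate_deprecated_IR_V7",
    ]


# "/opt/intel/openvino" is only an example value for $OPENVINO_INSTALL_DIR.
cmd = build_mo_command("frozen_darknet_yolov3_model.pb", "/opt/intel/openvino")
print(shlex.join(cmd))
```

The list could then be passed to subprocess.run(cmd, check=True) from the Model Optimizer directory, launched from a shell in which the setupvars script has already been run.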

⚡ The --generate_deprecated_IR_V7 parameter forces the Model Optimizer to generate the older IR version 7. This is due to the compatibility bug associated with IR version 10 already mentioned in part 1. Without this parameter, your converted model will fail during inference with rather cryptic error messages. ⚡

⚡ If you want to run OpenVINO demos using your converted model, please note that you may need to add the --reverse_input_channels option, since OpenVINO demos typically expect the colour channels to be in BGR rather than the usual RGB order. Consult the official OpenVINO demo documentation to check the demo’s specific input requirements. ⚡
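The --reverse_input_channels option bakes this channel swap into the IR. Without it, the application itself would have to reorder the channels of every frame it passes to the model, which in NumPy is a single slicing operation. A minimal sketch, with a hypothetical helper name:

```python
import numpy as np


def bgr_to_rgb(frame: np.ndarray) -> np.ndarray:
    """Swap the first and last colour channels of an HxWx3 image."""
    return frame[..., ::-1]


bgr = np.zeros((2, 2, 3), dtype=np.uint8)
bgr[..., 0] = 255  # pure blue in OpenCV's BGR order
rgb = bgr_to_rgb(bgr)
print(rgb[0, 0])  # [  0   0 255]
```

The same slice converts in both directions, so applying it twice restores the original frame.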

As a result, we obtain the edge-optimised YOLOv3-tiny network topology (.xml) and weight and bias terms (.bin) IR files.

To test the validity of the generated IR files, you may want to run the OpenVINO object detection YOLOv3 demo on your main machine. Please take into account the --reverse_input_channels remark above in this context.

The result for a sample input video is shown below. As is to be expected when using YOLOv3-tiny for inference, the frame rate easily reaches near real-time inference throughput on a non-edge device, at the expense of rather poor car detection and bounding box accuracy in places.

To conclude this preparation stage, simply transfer the IR files to your Raspberry Pi. 🗹

This leaves us with incorporating the converted YOLOv3-tiny model in a custom-developed application running on the Raspberry Pi.

Object detection application using YOLOv3-tiny on the edge

Our application consists of three main elements which, generally speaking, are representative of most OpenVINO-based edge applications:

Inference Engine model loading

App-based input processing and inference request

App-based inference result and output processing

This is reflected in the following code structure of our Raspberry Pi application:

Helper class for loading of the IR into the Inference Engine and performing inference

Core routine consisting of video and camera input preprocessing, frame extraction and inference and video output processing

YOLOv3-tiny model parameterisation class

We will use the Python wrapper of the Inference Engine API. The codebase can be found here on GitHub. The elements concerning YOLO inference processing largely coincide with, for example, the corresponding parts within object_detection_demo_yolov3_async.py from the OpenVINO YOLO showcase. So, to keep things easy and to the point, in the following, I discuss the OpenVINO-specific elements only.

Let’s have a quick look at the YOLOv3-tiny parameterisation in the form of Python class YoloParams first. Its values correspond to the original darknet parameterisation, so no surprises here in principle. Choosing the “right” set of anchors (lines 9–10), however, is as much an art as it is a science, and the particular set of anchor values used here is likely not the best choice for the object detection scenarios considered further below. So, in case you, too, have been wondering about YOLO anchor parameter selection, please have a look here.
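To illustrate what the anchor parameterisation amounts to: YOLOv3-tiny has two output layers, and each one only uses the subset of anchors selected by its mask. The sketch below is in the spirit of the anchor masking performed by the OpenVINO YOLO demo’s YoloParams class, but it is a simplified, hypothetical helper; the six anchor pairs are those of the darknet yolov3-tiny.cfg, and the mask 3,4,5 is that of its coarse 13x13 output layer.

```python
from typing import List, Sequence

# Anchor (width, height) pairs in input pixels from the darknet yolov3-tiny.cfg.
TINY_ANCHORS = [10, 14, 23, 27, 37, 58, 81, 82, 135, 169, 344, 319]


def masked_anchors(anchors: Sequence[float], mask: Sequence[int]) -> List[float]:
    """Select the anchor pairs a given YOLO output layer is responsible for."""
    selected: List[float] = []
    for idx in mask:
        selected += [anchors[2 * idx], anchors[2 * idx + 1]]
    return selected


# The coarse 13x13 output layer uses the three largest anchors.
print(masked_anchors(TINY_ANCHORS, [3, 4, 5]))  # [81, 82, 135, 169, 344, 319]
```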

The OpenVINO-specific methods provided by the helper class Network are more relevant in the context of this two-part blog series: the load_model method instantiates an object of the Inference Engine Core class, IECore (line 24 below). This class provides an abstraction layer across the supported devices, hiding device specifics. As a consequence, the methods of this class typically expect the device one is actually working with as a parameter, which is why a device parameter is included in the parameter list of load_model. Since we are dealing with the Intel Neural Compute Stick 2 as inference device, this parameter’s default value is set to “MYRIAD”.

The YOLOv3-tiny object detection model, in the form of the previously created IR files, is then read into an IENetwork object (line 29). The IENetwork class supports the reading and manipulation of model parameters such as batch size, numerical precision, model shape and various layer properties. This functionality is used to set the batch size to 1 (line 31), since we will be performing inference on one frame at a time. This single-input requirement is also asserted in line 33 by inspecting the model’s input topology. If successful, an executable version of the model is instantiated in line 36 and loaded onto the MYRIAD device for inference. Finally, lines 39–40 create iterable objects for the model input and output layers with the help of the IENetwork object.
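For orientation, the helper class described above might look roughly as follows. This is a minimal sketch assuming the OpenVINO 2020.x Python API (IECore.read_network, which returns an IENetwork, plus IENetwork.inputs and InferRequest.outputs, all of which were deprecated in later releases). It is not the code from the article’s repository, and the line numbers quoted above do not apply to it.

```python
import os


class Network:
    """Sketch of an Inference Engine helper (OpenVINO 2020.x Python API assumed)."""

    def __init__(self):
        self.ie = None
        self.net = None
        self.exec_net = None
        self.input_blob = None
        self.output_blob = None

    def load_model(self, model_xml, device="MYRIAD"):
        # Imported lazily so that this module can be imported without OpenVINO.
        from openvino.inference_engine import IECore

        model_bin = os.path.splitext(model_xml)[0] + ".bin"
        self.ie = IECore()
        # read_network returns an IENetwork object (available from OpenVINO 2020.2).
        self.net = self.ie.read_network(model=model_xml, weights=model_bin)
        self.net.batch_size = 1  # one frame per inference request
        assert len(self.net.inputs) == 1, "expected a single input layer"
        self.exec_net = self.ie.load_network(
            network=self.net, device_name=device, num_requests=1)
        self.input_blob = next(iter(self.net.inputs))
        self.output_blob = next(iter(self.net.outputs))

    def get_input_shape(self):
        return self.net.inputs[self.input_blob].shape

    def async_inference(self, image, request_id=0):
        self.exec_net.start_async(request_id=request_id,
                                  inputs={self.input_blob: image})

    def wait(self, request_id=0):
        # -1: block until the result is available; returns the status code.
        return self.exec_net.requests[request_id].wait(-1)

    def extract_output(self, request_id=0):
        # YOLOv3-tiny has two output layers, so all outputs are returned as a dict.
        return self.exec_net.requests[request_id].outputs
```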

⚡ Since we are dealing with a MYRIAD device, I have, to keep things simple, opted against the usual safety check for unsupported network layers, which is required when using a CPU device for visual inference: since the Model Optimizer preprocessing that goes into the IR file generation is device-agnostic, the optimised neural network might contain layers not supported by the specific CPU being used. The network therefore needs to be checked for unsupported layers prior to CPU-based inference, and processing should be aborted if any are found, to prevent arbitrary results or system crashes. Since the above lines of code also support CPU-based inference, if you want to experiment with inference on the Raspberry Pi’s CPU instead of an Intel Neural Compute Stick 2, have a look at, for example, object_detection_demo_yolov3_async.py for the additional layer support-related code lines required. ⚡
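The layer support check mentioned above boils down to a set difference. A minimal sketch, assuming the OpenVINO 2020.x Python API, where IECore.query_network(network=net, device_name="CPU") returns a dict keyed by the supported layer names and net.layers holds all layer names of the network; the helper name and the layer names in the example are hypothetical:

```python
from typing import Iterable, List, Mapping


def find_unsupported_layers(layer_names: Iterable[str],
                            supported: Mapping[str, str]) -> List[str]:
    """Return the layers without an entry in the device's support map."""
    return [name for name in layer_names if name not in supported]


# Hypothetical layer names; with OpenVINO 2020.x these would come from
# net.layers and ie.query_network(network=net, device_name="CPU").
missing = find_unsupported_layers(["conv_0", "leaky_0", "custom_op"],
                                  {"conv_0": "CPU", "leaky_0": "CPU"})
if missing:
    print(f"Unsupported layers, aborting: {missing}")
```

In an application, the print would be followed by sys.exit(1) so that inference never starts.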

As far as the other methods within class Network are concerned, the get_input_shape method uses the IENetwork object to return the shape of the model’s input layer.

Method async_inference takes an input request ID and launches an asynchronous inference for that request. Since we are not doing truly asynchronous processing, but rather push one frame at a time to the Inference Engine, the request ID is set to 0. Truly asynchronous processing would require different IDs for different inference requests, pushing additional frames to the Inference Engine whilst it might still be processing previous requests. These different request IDs then make it possible to distinguish between the various inference results and to perform the individually required further processing.
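Truly asynchronous processing could, for example, hand out request IDs round-robin, so that one frame is submitted whilst the previous one is still being processed. The sketch below is hypothetical and only generates the IDs; it assumes the executable network was loaded with a matching num_requests value (e.g. ie.load_network(..., num_requests=2)).

```python
from itertools import cycle, islice
from typing import Iterator


def request_ids(num_requests: int) -> Iterator[int]:
    """Yield inference request IDs round-robin: 0, 1, ..., num_requests - 1, 0, ..."""
    if num_requests < 1:
        raise ValueError("num_requests must be at least 1")
    return cycle(range(num_requests))


# With two requests, frame k can be submitted whilst frame k - 1 is still in flight.
print(list(islice(request_ids(2), 5)))  # [0, 1, 0, 1, 0]
# With a single request, as in this article, every frame uses ID 0.
print(list(islice(request_ids(1), 3)))  # [0, 0, 0]
```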

Since the inference request is launched asynchronously, the wait method is used to wait for it to complete before trying to retrieve any inference result. Note that this status check needs to reference the correct request ID, i.e. 0 in this case.

Method extract_output then returns the inference output generated by an inference request against the executable network. The request index again needs to be set to 0 to reference the correct inference request. Passing -1 to the wait function makes it block until the inference result is available and only then return the status. (A positive value would instead block until either the result becomes available or the given timeout in milliseconds elapses, whichever comes first, whilst 0 returns the current status immediately.)

With the various OpenVINO-related helper methods out of the way, this leaves the discussion of the video inference core routine, infer_video, within the tinyYOLOv3.py file of the GitHub repository, which uses the helper methods to take video or camera input in order to generate on-screen and video file inference results.

The OpenVINO-related initialisation within infer_video consists of the Inference Engine initialisation with the help of the Network class (line 7 below), followed by the retrieval of the model’s input shape (line 18).
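The input shape retrieved here is what each frame has to be brought into before inference: resized to the model’s height and width, reordered from OpenCV’s HxWxC layout to CxHxW and given a leading batch dimension. A minimal sketch, assuming an NCHW input shape such as (1, 3, 416, 416); the helper name is hypothetical, and a nearest-neighbour resize in NumPy stands in for cv2.resize so that the sketch runs without OpenCV:

```python
import numpy as np
from typing import Sequence


def preprocess(frame: np.ndarray, input_shape: Sequence[int]) -> np.ndarray:
    """Resize an HxWxC frame to the model input size and reorder it to NCHW."""
    n, c, h, w = input_shape
    # Nearest-neighbour resize by index sampling (stand-in for cv2.resize).
    rows = np.arange(h) * frame.shape[0] // h
    cols = np.arange(w) * frame.shape[1] // w
    resized = frame[rows][:, cols]
    chw = resized.transpose(2, 0, 1)   # HWC -> CHW
    return chw.reshape(n, c, h, w)     # add the batch dimension (n = 1)


frame = np.zeros((480, 640, 3), dtype=np.uint8)  # e.g. one 640x480 camera frame
blob = preprocess(frame, (1, 3, 416, 416))
print(blob.shape)  # (1, 3, 416, 416)
```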

The net object is subsequently used to launch an asynchronous inference request on an individual, pre-processed video frame (line 3 below) and, having waited until the asynchronous inference is completed (line 8), retrieves the inference result using the extract_output helper method (line 9). This output is then parsed as applicable in the YOLOv3 model case.
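The YOLOv3 parsing step essentially decodes one box per grid cell and anchor using the formulas from the YOLOv3 paper [2] (b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w·e^(t_w), b_h = p_h·e^(t_h)), discards boxes below a confidence threshold (not shown) and then removes overlapping duplicates via intersection over union (IoU). A minimal sketch of the decoding and of greedy non-maximum suppression, with hypothetical helper names; it assumes raw network outputs, whereas an IR’s YOLO output layers may already have applied some of these activations, so check this against your model before reusing it. The IoU threshold of 0.4 is an illustrative value.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in input pixels


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(tx: float, ty: float, tw: float, th: float, col: int, row: int,
               side: int, anchor_w: float, anchor_h: float, input_size: int) -> Box:
    """Decode one raw YOLOv3 prediction for grid cell (col, row) and one anchor."""
    cx = (col + sigmoid(tx)) / side * input_size  # box centre, input pixels
    cy = (row + sigmoid(ty)) / side * input_size
    w = anchor_w * math.exp(tw)                   # anchors are in input pixels
    h = anchor_h * math.exp(th)
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.4) -> List[int]:
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) < iou_threshold for k in keep):
            keep.append(i)
    return keep


# Centre cell of the 13x13 grid with the anchor (81, 82) and all raw outputs at 0.
print(decode_box(0, 0, 0, 0, col=6, row=6, side=13,
                 anchor_w=81, anchor_h=82, input_size=416))
# (167.5, 167.0, 248.5, 249.0)
print(nms([(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)], [0.9, 0.8, 0.7]))
# [0, 2]
```

The decoded coordinates refer to the network input (here 416x416 pixels) and would still need to be scaled to the original frame size before drawing.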

⚡ To write the inference result to video later on within the infer_video method, I use the OpenCV VideoWriter class with an .avi output file extension and the MP42 codec: codec = cv2.VideoWriter_fourcc("M","P","4","2"). The search for the “right” combination of codec and file extension for a particular device setup can be somewhat frustrating. See, for example, this article by Adrian Rosebrock of Pyimagesearch on this matter. It gives you a number of pointers as to what might be potentially valid combinations for your particular setup. Also, it recommends installing the Python bindings for FFMPEG on your Raspberry Pi. This has worked rather well in my case. Still, the fact that an .avi file extension in combination with the MP42 codec worked for my setup after having installed the FFMPEG library does not mean that it will work for yours and you might find yourself having to go through a number of combinations until you obtain valid video output. ⚡

⚡ Please note that the infer_video method also makes use of the imutils.video module of Pyimagesearch’s imutils package, which provides an FPS helper class, to compute the frames per second (FPS) achieved by the processing pipeline. ⚡
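The FPS bookkeeping follows a simple start/update/stop pattern. The class below is a hypothetical stand-in modelled on that usage pattern of imutils’ FPS helper, not the imutils implementation; the injectable clock only exists to make it testable.

```python
import time
from typing import Callable, Optional


class FpsCounter:
    """Count processed frames and report the average frames per second."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self._clock = clock
        self._start: Optional[float] = None
        self._end: Optional[float] = None
        self._frames = 0

    def start(self) -> "FpsCounter":
        self._start = self._clock()
        return self

    def update(self) -> None:
        self._frames += 1  # call once per processed frame

    def stop(self) -> None:
        self._end = self._clock()

    def fps(self) -> float:
        if self._start is None or self._end is None:
            raise RuntimeError("call start() and stop() first")
        elapsed = self._end - self._start
        return self._frames / elapsed if elapsed > 0 else 0.0


ticks = iter([0.0, 2.0])  # fake clock: the run takes exactly two seconds
counter = FpsCounter(clock=lambda: next(ticks)).start()
for _ in range(6):
    counter.update()
counter.stop()
print(counter.fps())  # 3.0
```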

We are now ready to give our application a test run on the Raspberry Pi, using both an MP4 input video and the Raspberry Pi’s on-board camera.

In the case of an MP4 input file, the following command launches the application, reads in the input file, produces an on-screen window showing the inference results in the form of bounding boxes around detected objects, including the corresponding labels, and writes these results to an .avi output file:

python tinyYOLOv3.py --m "frozen_darknet_yolov3_model.xml" --i "test_video.mp4" --l "coco.names"

where you might have to add your specific paths to the model, input video and class label file parameters. The resulting output video for the test file [1] used is shown below. The Raspberry Pi manages a respectable frame rate of around 3 FPS thanks to the Intel Neural Compute Stick 2. Without this hardware accelerator stick, processing slows down to a crawl, with the Raspberry Pi CPU mostly occupied with decoding the MP4 input. Not unexpectedly, the performance benefit of the YOLOv3-tiny model comes at the price of low object detection accuracy: although persons and bicycles are detected reasonably well, other objects such as dogs and balls are not detected at all.

When it comes to inference on a live camera feed, the launch command looks rather similar with the exception of no input video file being provided, of course, so that the application defaults to camera input processing instead, i.e.:

python tinyYOLOv3.py --m "frozen_darknet_yolov3_model.xml" --l "coco.names"
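The camera fallback of these two command lines can be expressed with argparse. A hypothetical sketch using the three options shown above; that camera input is opened via device index 0 (as accepted by cv2.VideoCapture) is an assumption about the application, not taken from its codebase.

```python
import argparse
from typing import List, Optional, Union


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="YOLOv3-tiny object detection")
    parser.add_argument("--m", required=True, help="path to the IR .xml file")
    parser.add_argument("--i", default=None, help="input video file; omit for camera")
    parser.add_argument("--l", required=True, help="path to the class label file")
    return parser.parse_args(argv)


def video_source(args: argparse.Namespace) -> Union[str, int]:
    """Use the input file if given, otherwise camera device index 0."""
    return args.i if args.i else 0


args = parse_args(["--m", "frozen_darknet_yolov3_model.xml", "--l", "coco.names"])
print(video_source(args))  # 0
```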

Again, you might have to add your specific paths to the model and class label file parameters. The frame rate increases to between 4 and 5 FPS. This is due to the MP4 input file processing being replaced with the less computationally demanding camera feed processing. Similarly to the input video scenario, certain objects such as the remote control and the coffee mug are detected pretty reliably, whilst, for example, the desk chair is not detected at all.

Depending on the application scenario, these relatively low levels of accuracy might be good enough. If not, more accurate but frequently less execution-efficient models such as MobileNet single-shot detectors could be used instead. 🗹

It’s a wrap.

Starting with the overview of Edge AI hardware accelerators and development boards, the Raspberry Pi configuration and Intel OpenVINO installation guides and an object detection demo application in part 1, we have worked our way all the way to a custom implementation of a YOLOv3-tiny-based object detection application processing video file or camera input on a Raspberry Pi edge device featuring an Intel Neural Compute Stick 2. The codebase can be found on GitHub.

Although the Intel OpenVINO package comes with the odd intricacy, overall it represents an excellent and straightforward way of implementing AI applications on edge devices, especially so when making use of pre-trained and pre-converted models from the Open Model Zoo.

Thanks to these fairly recent developments, a realm of Edge AI application scenarios too numerous to mention has opened up, ranging from, for example, smart home or smart drone devices to gesture recognition and visual sensor-based automation scenarios. All that is needed is a relatively low-cost device such as the Raspberry Pi, a hardware accelerator, the free OpenVINO library, some imagination and a little bit of perseverance.

References

[1] Y. Xu, X. Liu, L. Qin, and S.-C. Zhu, “Cross-view People Tracking by Scene-centered Spatio-temporal Parsing”, AAAI Conference on Artificial Intelligence (AAAI), 2017

[2] J. Redmon and A. Farhadi, “YOLOv3: An Incremental Improvement”, Technical Report, arXiv:1804.02767, April 2018